I have noticed that sometimes, if I glance at a spinning car wheel—particularly if it has sharply defined spokes—the wheel will appear to freeze or stop for just an instant. Somehow my retinas, my optic nerves, my brain’s visual cortex, my sensory processing center, or everything all together is able to extract the spoke pattern from the spinning blur and visualize it. Similarly, in a crowded restaurant, I will sometimes suddenly hear a distinct word or phrase pop out of the babble of many voices.

More often, I think, the reverse is true. We see a shadow move in our peripheral vision and interpret it as the threat from an animal, a stalker—or a ghost. Or we glimpse in the dappled shade of a streetlight through tree leaves an upright form that is just out of focus—a Post Office box perhaps, or a phone junction cabinet—and we interpret the shape as a human being waiting to meet or ambush us. Or we hear the wind in the trees, or the rush of hot air from a furnace register, and interpret it as a voice trying to tell us something meaningful.1

Perception is a funny thing. We are forced to trust our senses in order to navigate through life, and yet we must be continually on guard to interpret correctly what we see, hear, touch, and smell. Otherwise we might drive off the road at every shifting shadow, scudding piece of paper, or flashing light. Anyone who has worked with a skittish horse knows that it can be incredibly shy of the slightest peripheral movement that might be hostile. In an animal that is vulnerable and virtually defenseless against its predators, and whose main safety lies in evasion and speed—a category which includes unarmed, naked humans as well as horses—this dive away from the unknown is a pure survival trait.2

So we need to be on guard lest we appear defensive and foolish in polite company. The trouble is, our sensory perceptions are too closely linked to the cortical functions of analysis and interpretation. We don’t have a standard reference with which to compare what we see, hear, and feel. And we don’t have much of a pause function between perception and interpretation which would allow us to gather more data, examine possible causes, and reach a leisurely conclusion.

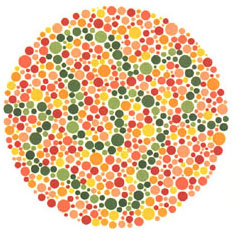

We also need to be aware of differences in perception between people. A room that feels hot to some may feel cold to others. And color perception is notoriously tricky. For example, I have satisfactory color vision and can tell a ripe from an unripe tomato by its color, or the difference between a red light and a green one. But as a teenager I was tested for color blindness using the Ishihara plates—those circles with a pebbled pattern, out of which a number appears only if you are seeing the colors correctly. I missed about half of them, resulting in an early diagnosis of “confusion with tints.” And I can verify this in everyday life. Sometimes a color that other people call “maroon” I will see as “brown.” Sometimes what everyone calls a brown sweater will look green to me. I don’t consider this a disability—but please don’t ask me to dress myself in subtle colors or send me out to buy wall paint.

This is why, in order to progress in science, we had to invent instruments that have no perceptual or interpretive function. A microscope might let you see something incredibly small, but it still won’t keep you from confusing, say, diseased and healthy cells, or help you differentiate among the various types of bacteria, at least without special training and reference materials. That’s why most modern instruments like temperature sensors, weigh scales, spectrophotometers, and the like include a reference standard, a calibration step, or a diagnostic procedure. We know the job the machine was designed to do, but we also need to know if it is functioning normally and accurately.

And yet, when you aggregate the results of many such machines generating thousands or millions of data points, you can still make mistakes. We can’t always trust the results of those supposedly impartial instruments. For example, the recent pause in measured global warming since 1998—which none of the current climate change models either predicted or can explain—is now attributed to our misreading of surface temperature data.3 And the National Oceanic and Atmospheric Administration (NOAA) has routinely been making adjustments to the raw temperature data in the continental U.S. for the period preceding this supposed hiatus.4

Questions of interpretive bias, sloppy science, and cheating aside, can we—even with the best will in the world and the highest commitment to accuracy—truly know what’s going on in the world around us?

I believe we can know—but only in the long run and after much discussion, consideration, and reinterpretation. In the short term, however, our approach must still be “trust, but verify.” For science, like every other human activity, is run by fallible individuals. They can make mistakes, see what they want to believe, and let good intentions get in the way of hard data. And if you put your faith in committees, associations, and government bureaucracies to level the playing field and leaven the results, remember that most of these groups are either democratized to represent and respond to all voices, or they tend to fall under the sway of a powerful, prestigious, and authoritarian leader. If the group is democratized, think of how purely logical and abstractly accurate the pronouncements of such organizations usually are. If authoritarian, remember that one man or woman, regardless of intellect or knowledge, can still make mistakes.5

In all of this, we might hope for a significant advancement in accuracy with the development of artificial intelligence. But will that really help? Will a machine intelligence be cool, calculating, and free of bias? Certainly a modern computer has those characteristics—except that every computer made today was programmed by a human being, either at the level of data interpretation and system modeling, or in the application design, or down at the operating system level. And for good reason the mantra of every computer programmer is “Garbage in, garbage out.”

But what about a machine that programs itself? Well, such a machine is not going to be simple. It will not develop its programming in isolation and focus its intelligence on a single problem solution or data set. A machine that programs the development of its own knowledge and capability is going to be like a human being who learns from what he or she reads and experiences, governed by subtle adjustments in his or her previous experiences, attitudes, likes and dislikes, and—yes—assumptions and prejudices. A machine that approximated human-scale intelligence would of necessity be formed from wide experience that incorporated many examples which might be either compatible or conflicting, involved comparisons drawn from both accurate and skewed data sets—here, think “apples and oranges”—and included many unanswered and perhaps unanswerable questions. The more powerful such a machine intelligence became, the more imaginative, associational, probabilistic, and provisional—here, think “inclined to guess”—it would become. If you doubt this, consider the IBM computer “Watson,” which beat its human competitors on Jeopardy! but could still make some egregious errors.6

If anything, perceptual errors and errors of analysis and interpretation are simply part of the human condition—no matter whether that condition reflects human or machine evolution and thinking. We all surf an erratic wave of slippery data, and the best we can do is continually check ourselves and try to hold on to our common sense.

1. Other senses can be unreliable, too. The olfactory nerves can go haywire just before a seizure, subjecting the patient to familiar smells—sometimes pleasant, sometimes not, but never actually related to the current environment. Seizure auras can also be visual and auditory. Similarly, our sense of balance—the inner ear’s appreciation of the pull of gravity—can be fuddled by changes in blood pressure, infections, certain medications, or a head injury.

2. As a motorcyclist, I must be constantly aware of surrounding traffic. One of the greatest dangers is drivers in the next lane moving sideways into my space, either through a conscious lane change or unconscious drift. My sense of this is so finely attuned that I tend to react to any object which my peripheral vision perceives as headed on a course that intersects with mine. Since people in other lanes may only be moving safely into the lane beside me, I must consciously monitor this reaction and not take violent evasive action when a car or truck two lanes away starts crossing my perceived path.

3. See Thomas R. Karl et al., “Possible artifacts of data biases in the recent global surface warming hiatus,” Science, June 26, 2015.

4. See the National Oceanic and Atmospheric Administration’s page “Monitoring Global and U.S. Temperatures at NOAA’s National Centers for Environmental Information” for a discussion of adjustments to the U.S. surface temperature record.

5. A meme going the rounds on Facebook quotes Richard Feynman: “It doesn’t matter how beautiful your theory is, it doesn’t matter how smart you are. If it doesn’t agree with experiment, it’s wrong.” To which I reply: Yes, but be sure to check the design and execution of that experiment. Some experiments designed to test one thing end up actually testing and trying to prove something else.

6. See “Watson Wasn’t Perfect: IBM Explains the ‘Jeopardy!’ Errors,” Daily Finance, February 17, 2011.