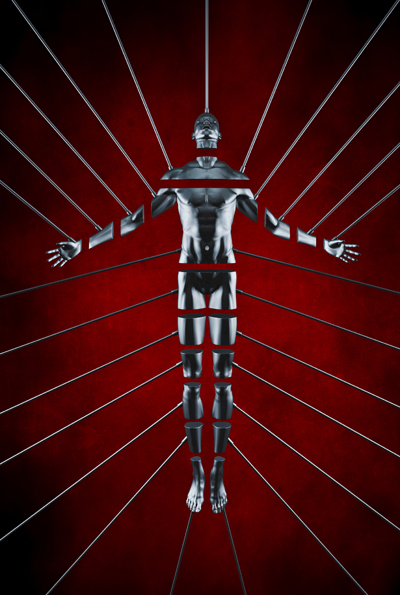

I have been writing fiction about artificial intelligence (AI) for most of my adult life.1 In all cases, my intelligences—whether a viral computer spy or a robot pilot from the 11th millennium—are what one science fiction author calls “a little man with a machine hat.” That is, they are multi-capable, self-aware programs able to function like a human being, carry on conversations, have thoughts and opinions, and occasionally tell jokes. The only difference is that they aren’t made out of gooey carbon compounds. In other words, they’re just another set of fictional characters.

With all the talk and all the hype about AI these days, it is useful to understand what the current crop of programs is and is not. They are not Skynet, “deciding our fate in a microsecond.” They are not functionally equivalent to human intelligence: that is, they are not thought processors capable of thinking through complicated, real-life situations, perceiving implications, and making distinctions and decisions. They do not have a lifetime of experience or what we humans would call “common sense.” They are ambitious children. And they are not all that intelligent. They are also designed—at least for now—for a single function and not the generalized array of capabilities we think of as comprising human-scale intelligence.

I recently heard an interview with IBM Chairman and CEO Arvind Krishna. He said that programming the Watson computer that became a Jeopardy champion took six months. That was a lot of work for a machine to compete in the complicated but essentially trivial task of becoming a game show contestant. IBM is now selling the Watson model as a way for corporations to analyze their vast amounts of data, like aircraft maintenance records or banking operations. Artificial intelligence in these applications will excel, because computers have superhuman scales of memory, analytical capability, and attention span. But Krishna cautioned that in programming an artificial intelligence for corporate use, the operators must be careful about the extent and quality of the database it is fed. In other words, the programmer’s maxim still holds true: “garbage in/garbage out.”

I can imagine that AI systems will take on large sets of data for corporate and eventually for personal use. They will manage budgets, inventories, supply chains, operating schedules, contract formation and execution, and other functions where the data allow for only a limited number of interpretations. They will be very good at finding patterns and anomalies. They will do things that human minds would find repetitive, complicated, boring, and tiresome. They will be useful adjuncts in making business decisions. But they will not replace human creativity, judgment, and intelligence. Anyone who trusts a computer more than an experienced human manager is taking a huge risk, because the AI is still a bright but ambitious child—at least until that particular program has twenty or thirty years of real-world experience under its belt.

Of recent concern to some creative and commercial writers is the emergence of the language processor ChatGPT, developed by OpenAI, whose investors include Microsoft. Some people are saying that this program will replace story, novel, and script writers, advertising copywriters, documentation and technical writers, and other “content creators.” Other people are saying, more pointedly, that such programs are automated plagiarism machines. People who have actually used the programs note that, while they can create plausible and readable material, they are not always to be trusted. They sometimes make stuff up when they can’t find a factual reference or a model to copy, being free to hallucinate in order to complete a sentence. They freely exercise the gift of gab. However, I expect that this tendency can be curbed with express commands to remain truthful to real-world information—if any such thing exists on an internet saturated with misinformation, disinformation, and free association.2

The reality is that ChatGPT and its cohort of language processors were created to pass the Turing Test. This was a proposal by early computer genius Alan Turing that if a machine could respond to a human interlocutor for a certain length of time in such a way that the human could not tell whether the responses were coming from another human being or a computer, then the machine would be ipso facto intelligent. That’s a conclusion I would challenge, because human intelligence represents a lot more than the ability to converse convincingly. Human brains were adapted to confront clues from the world of our senses, including sight, sound, balance (or sense of gravity and acceleration), tactile and temperature information, as well as the words spoken by other humans. Being able to integrate all this material, draw inferences, create internal patterns of thought and models of information, project consequences, and make decisions from them is a survival mechanism. We developed big brains because we could hear a rustle in the grass and imagine it was a snake or a tiger—not just to spin yarns around the campfire at night.

The Turing model of intelligence—language processing—has shaped the development of these chat programs. They analyze words and their meanings, grammar and syntax, and patterns of composition found in the universe of fiction, movie scripts, and other popular culture. They are language processors and emulators, not thought processors. And, as such, they can only copy. They cannot create anything really new, because they have no subconscious and no imaginative or projective element.

In the same way, AI developed for image processing, voice recognition, or music processing can only take a given input—a command prompt or a sample—and scan it against a database of known fields, whether photographs and graphic art, already interpreted human speech, or analyzed music samples. Again, these programs can only compare and copy. They cannot create anything new.

In every instance to date, these AI programs are specialty machines. The language processors can only handle language, not images or music. The Watson engines must be programmed and trained in the particular kinds of data they will encounter. None of the artificial intelligences to date are multi-functional or cross-functional. They cannot work in more than one or two fields of recognizable data. They cannot encounter the world. They cannot hear a tiger in the grass. And they cannot tell a joke they haven’t heard before.

1. See, for example, my ME and The Children of Possibility series of novels.

2. The language processors also have to be prompted with commands in order to create text. As someone who has written procedural documentation for pharmaceutical batches and genetic analysis consumables, I can tell you that it’s probably faster to observe the process steps and write them up yourself than to try to describe them for an AI to put into language. And then you would have to proofread its text most carefully.