A saying favored by military strategists, although coined by the Polish-American scientist and philosopher Alfred Korzybski, holds that “the map is not the territory.”¹ This is a reminder that maps are made by human beings, who always interpret what they see. As with the reports of spies and the postcards of vacationing tourists, the mapmaker tends to emphasize some things and neglect or ignore others. Human bias is always a consideration.

And with maps there is the special consideration of timing. A surveyor’s work, which depends on major geographic features like mountain peaks and other benchmarks that tend to stand for thousands of years, may remain reliable within a human lifespan, but every map is still a snapshot in time. From one year to the next, a road may become blocked, a bridge collapse, a river change course, or a forest burn, all of which change the terrain and its suitability for a forced march or a battle. If you doubt this, try using a decades-old gas station map to plan your next trip.

This caution should apply doubly to the current penchant for computer modeling in climatology, environmental biology, and political polling. Too often, models are accepted as new data and as an accurate representation of a real-world situation or, worse, as a prediction of one. Unless the modeler is presenting or verifying actual new data, the model is simply manipulating existing data sources, which may themselves be subject to interpretation and verification.

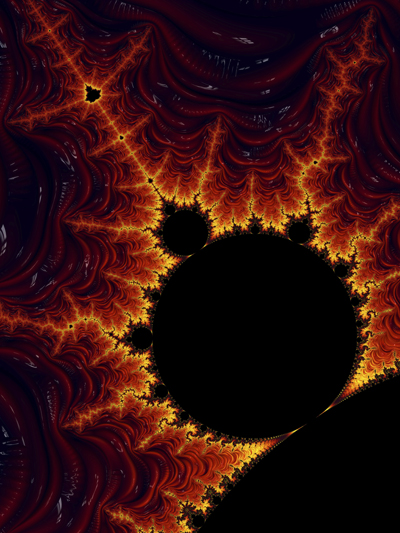

But that is not the whole problem. Any computer model, unless it becomes fiendishly complex, exists by selecting certain facts and trends over others and by making or highlighting certain assumptions while downplaying or discarding others. Model making, like drawing lines for topographic contours, roads, and rivers on a map, is a matter of selection for the sake of simplicity. The only way to model the real world with complete accuracy would be to understand the situation and motion of every component, the direction and strength of every force, and the interaction and result of every encounter. The computer doesn’t exist that can do this on a worldwide scale for anything so complex and variable as weather systems; predator/prey relationships and species variation and mutation; or political preferences among a diverse population of voters and non-voters.

Computer modeling these days, especially in relation to climate change and its effects or to political outcomes, is an exercise in prediction. The goal is not so much to describe what is going on now as to foretell what will happen in the future, sometimes by a certain date in November, sometimes by the beginning of the next century. Predicting the future is an age-old dream of mankind, especially when you can be the one to know what will happen while those around you have to grope blindly forward in the dark. Think of oracles delivered only to the powerful, or of tea leaves and Tarot cards read for a paying patron.

But complex systems, as history has shown, sometimes revolve around trivial and ephemeral incidents. A single volcanic eruption can change the weather over an entire hemisphere for one or several years. A surprise event in October can change or sour the views of swing voters and so affect the course of an election. The loss of a horseshoe nail can decide the fate of a king, a dynasty, and a country’s history. Small effects can have great consequences, and none of them can be predicted or modeled accurately.

When climate scientists first published the results of models showing an average global temperature rise of about two degrees Celsius by the year 2100, critics countered on several points: that the models focused on carbon dioxide, a weak greenhouse gas; that they required the modest “forcing” from this gas to set off a positive feedback loop that would put more water vapor, a more potent greenhouse gas, into the atmosphere; and that they did not consider negative feedback loops that would reduce the amount of carbon dioxide or water vapor over time. The climate scientists, as I remember, replied that their models were proprietary and could not be made public, for fear they would be copied or altered. But this defense also placed them and their work beyond inspection. Also, as I remember, no one has since attempted to measure the increase, if any, in global water vapor, not just as cloud cover but as the vapor loading or average humidity of the atmosphere as a whole. And you don’t hear much anymore about either the models themselves or the water vapor, just about the purported effects of a predicted warming that is said to be arriving years ahead of schedule.²

Now add these models, which for whatever reason cannot be evaluated and verified, to the broader trend of scientific studies whose results cannot be reproduced using the methodology and equipment cited in the published paper. Irreproducibility of results is a growing problem in the scientific world, according to the editorials I read in magazines like Science and Nature. If claims cannot be verified by people with the best will and intentions, that does not make the original author either a liar or a villain. And there is always a bit of “noise” (static you can’t distinguish or interpret that interferes with the basic signal) in any system as vast and complex as the modern scientific enterprise taking place in academia, public and private laboratories, and industrial research facilities. Still, the issue of irreproducibility is troubling.

And, for me, it is even more troubling that computer models and projections are now accepted as basic research and as scientific verification of a researcher’s hypothesis about what’s going on. At least with Tarot cards, we can examine the symbols and draw our own conclusions.

1. To which Korzybski added, “the word is not the thing”—a warning not to confuse models of reality with reality itself.

2. We also have a measured warming over the past decade or so, with peaks that supposedly exceed all previous records. But then, many of those records have since been adjusted to reflect changing conditions, such as the relocation of monitoring stations at airports and the urban “heat island” effect from asphalt parking lots and dark rooftops. The adjustments reach not only the current statement of past temperatures but also the raw data, rendering the actual record unrecoverable.

As a personal anecdote, I remember a trip we made to Phoenix in October 2012. I was standing in our hotel’s parking lot, next to the outlet for the building’s air-conditioning system. The recorded temperature in the city that day was something over 110 degrees Fahrenheit, but the air coming out of that huge vent was much hotter, more like the blast from an oven. It occurred to me that a city like Phoenix tries to cool almost every enclosed living and commercial space by twenty or thirty degrees, which means that most of the acreage in town is spewing the same extremely hot air into the atmosphere. And I wondered how much that added heat load must raise the ambient temperature in the city itself.